I'll be reviving http://cg-soft.com and also getting new business cards.

Too busy?

Tuesday, September 10, 2013

Saturday, September 7, 2013

I got the #AutoAwesome treatment

Google has something called #autoawesome, which looks at everything you upload and figures out something in common.

In this case it combined my graphics from the previous post into an animated gif. It worked out quite nicely:

In this case it combined my graphics from the previous post into an animated gif. It worked out quite nicely:

Thursday, September 5, 2013

Treating Environments Like Code Checkouts

In our business, the term "environment" is rather overloaded. It's used in so many contexts that just defining what an environment is can be challenging. Let's try to feel out the shape...

We are in business providing some sort of service. We write software to implement pieces of the service we're offering. This software needs to run someplace. That someplace is the environment.

In traditional shrink wrap software, the environment was often the user's desktop, or the enterprise's data center. The challenge then was to make our software be as robust as possible in hostile environments over which we had little control.

The next step on the evolutionary ladder was the appliance. Something we could control to some extent, but it still ends up sitting on a location and in a network outside our control.

The final step is software as a service, where we control all aspects of the hosts running our applications.

From a build and release perspective, what matters is what we can control. So it makes sense to define an environment as a set of hardware and software assembled for the purpose of running our applications and services.

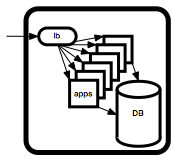

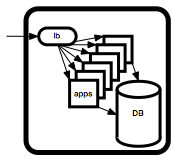

A typical environment for a web service might look like the diagram on the right.

So an environment is essentially:

When we develop new versions of our software, we would like to test it someplace before we inflict it on paying customers. One way to do that is the create environments in which the new version can be deployed and tested.

A big concern is how faithfully our testing environment will emulate the actual production environment. In practice, the answer varies wildly.

In the shrink wrap world, it is common to maintain a whole bank of machines and environments, hopefully replicating the majority of the setups used by our customers.

In the software as a service world, the issue is how well we can emulate our own production environment. For large scale popular services, this can be very expensive to do. There are also issues around replicating the data. Often, regulatory requirements and even common sense security requirements preclude making a direct copy of personally identifiable data.

This being said, modern virtualization and volume management addresses many of the issues encountered when attempting to duplicate environments. It's still not easy, but it is a lot easier than it used to be.

If you have the budget, it is very worthwhile to automate the creation of new environments as clones of existing ones at will. If you can at least achieve this for 5-7 environments, you can adopt an iterative refinement process, very similar to the way you manage code in version control systems.

We start by cloning our existing production environment to create a new release candidate environment. This one will evolve to become our next release.

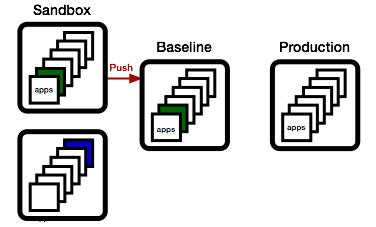

To update a set of apps, we first again clone the release candidate environment, creating a sandbox environment to test both the deploy process and the resulting functionality.

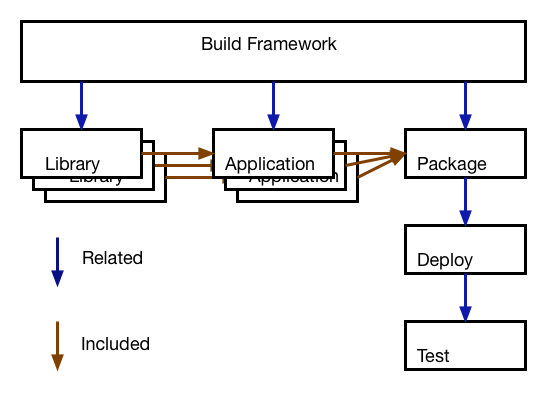

Then, we deploy one or more updated applications, freshly built from our continuous build service (aka Jenkins, TeamCity, or other).

The technical details on how the deploy happens are important, but not the central point here. I first want to highlight the workflow.

Now, let's suppose a different group is working on a different set of apps at the same time.

They will want to do the same: create their own sandbox environment by cloning the release candidate environment, then adding their new apps to it.

Meanwhile, QA has been busy, and the green app has been found worthy. As a consequence, we promote the sandbox environment to the release candidate environment.

We can do this because none of the apps in the current release candidate are newer than the apps in the sandbox.

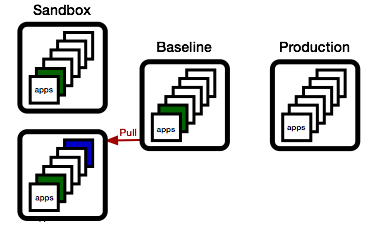

Now, if the blue team wishes to promote their changes, they first need to sync up. They do this by updating their sandbox with all apps in the baseline that are newer than the apps they have.

... and if things work out, they can then promote their state to the baseline.

This process should feel very familiar. It's nothing else but the basic change process as we know it from version control systems, just applied to environments:

We are in business providing some sort of service. We write software to implement pieces of the service we're offering. This software needs to run someplace. That someplace is the environment.

In traditional shrink wrap software, the environment was often the user's desktop, or the enterprise's data center. The challenge then was to make our software be as robust as possible in hostile environments over which we had little control.

The next step on the evolutionary ladder was the appliance. Something we could control to some extent, but it still ends up sitting on a location and in a network outside our control.

The final step is software as a service, where we control all aspects of the hosts running our applications.

From a build and release perspective, what matters is what we can control. So it makes sense to define an environment as a set of hardware and software assembled for the purpose of running our applications and services.

A typical environment for a web service might look like the diagram on the right.

So an environment is essentially:

Hardware

OS

Third Party Tools

Our Apps

+ Configuration

====================

Environment

In the shrink wrap world, it is common to maintain a whole bank of machines and environments, hopefully replicating the majority of the setups used by our customers.

In the software as a service world, the issue is how well we can emulate our own production environment. For large scale popular services, this can be very expensive to do. There are also issues around replicating the data. Often, regulatory requirements and even common sense security requirements preclude making a direct copy of personally identifiable data.

This being said, modern virtualization and volume management addresses many of the issues encountered when attempting to duplicate environments. It's still not easy, but it is a lot easier than it used to be.

If you have the budget, it is very worthwhile to automate the creation of new environments as clones of existing ones at will. If you can at least achieve this for 5-7 environments, you can adopt an iterative refinement process, very similar to the way you manage code in version control systems.

We start by cloning our existing production environment to create a new release candidate environment. This one will evolve to become our next release.

To update a set of apps, we first again clone the release candidate environment, creating a sandbox environment to test both the deploy process and the resulting functionality.

Then, we deploy one or more updated applications, freshly built from our continuous build service (aka Jenkins, TeamCity, or other).

The technical details on how the deploy happens are important, but not the central point here. I first want to highlight the workflow.

Now, let's suppose a different group is working on a different set of apps at the same time.

They will want to do the same: create their own sandbox environment by cloning the release candidate environment, then adding their new apps to it.

Meanwhile, QA has been busy, and the green app has been found worthy. As a consequence, we promote the sandbox environment to the release candidate environment.

We can do this because none of the apps in the current release candidate are newer than the apps in the sandbox.

Now, if the blue team wishes to promote their changes, they first need to sync up. They do this by updating their sandbox with all apps in the baseline that are newer than the apps they have.

... and if things work out, they can then promote their state to the baseline.

... and if the baseline meets the release criteria, we push to production.

Note that at this point, the baseline matches production, and we can start the next cycle, just as described above.This process should feel very familiar. It's nothing else but the basic change process as we know it from version control systems, just applied to environments:

- We establish a baseline

- We check out a version

- We modify the version

- We check if someone else modified the baseline

- If yes, we merge from the baseline

- We check in our version.

- We release it if it's good.

Tuesday, August 20, 2013

Dear Reader...

Hello there!

There aren't that many of you, so thanks to those who took the time to come.

I've been wondering what I can do to make this more interesting. Looking at the stats, it does appear that meta-topics are most popular:

That is interesting, since I would have thought that more detailed "how to" articles would be better.

Another thing I wonder about is the lack of direct response. Partly, that's because there just aren't that many folks interested in the topic, but maybe it's also the presentation.

I find that the most difficult part of my job isn't the technical aspect, it's the communication aspect. I often oscillate between saying things that are obvious and trivial, and saying things that have more controversy hidden that it appears at first, and people often only realize at implementation time what the ultimate effect of a choice is.

An important motivation for this blog is to practice finding the right level, so feedback is always appreciated.

... and yes, I need to post more stuff ...

There aren't that many of you, so thanks to those who took the time to come.

I've been wondering what I can do to make this more interesting. Looking at the stats, it does appear that meta-topics are most popular:

Another thing I wonder about is the lack of direct response. Partly, that's because there just aren't that many folks interested in the topic, but maybe it's also the presentation.

I find that the most difficult part of my job isn't the technical aspect, it's the communication aspect. I often oscillate between saying things that are obvious and trivial, and saying things that have more controversy hidden that it appears at first, and people often only realize at implementation time what the ultimate effect of a choice is.

An important motivation for this blog is to practice finding the right level, so feedback is always appreciated.

... and yes, I need to post more stuff ...

Sunday, July 21, 2013

Major Version Numbers can Die in Peace

Here's a nice bikeshed: should the major version number go into the package name or into the version string?

A very nice explanation of how this evolved: http://unix.stackexchange.com/questions/44271/why-do-package-names-contain-version-numbers

Even though it is theoretically possible to install multiple versions of the same package on the same host, in practice this adds complications. So even though rpm itself will happily install multiple versions of the same package, most higher level tools (like yum, or apt), will not behave nicely. Their upgrade procedures will actively remove all older versions whenever a newer one is installed.

The common solution is to move the major version into the package name itself. Even though this is arguably a hack, it does express the inherent quality of incompatibility when doing a major version change to a piece of software.

According to the semantic versioning spec, a major version change:

Note that you can make different choices. A very common choice is to maintain a policy of always preserving compatibility to the previous version. This gives every consumer the time to upgrade.

In either case, there is no need to keep the major version number in the version string. If you're a good citizen and always maintain backwards compatibility, your major version is stuck at "1" forever, not adding anything useful - and if you change the package name whenever you do break backwards compatibility, you don't need it anyways...

So, how about finding a nice funeral home for the major version number?

A very nice explanation of how this evolved: http://unix.stackexchange.com/questions/44271/why-do-package-names-contain-version-numbers

Even though it is theoretically possible to install multiple versions of the same package on the same host, in practice this adds complications. So even though rpm itself will happily install multiple versions of the same package, most higher level tools (like yum, or apt), will not behave nicely. Their upgrade procedures will actively remove all older versions whenever a newer one is installed.

The common solution is to move the major version into the package name itself. Even though this is arguably a hack, it does express the inherent quality of incompatibility when doing a major version change to a piece of software.

According to the semantic versioning spec, a major version change:

Major version X (X.y.z | X > 0) MUST be incremented if any backwards incompatible changes are introduced to the public APIA new major version will break non-updated consumers of the package. That's a big deal. In practice, you will not be able to perform a major version update without some form of transition plan, during which both the old and the new version must be available.

Note that you can make different choices. A very common choice is to maintain a policy of always preserving compatibility to the previous version. This gives every consumer the time to upgrade.

In either case, there is no need to keep the major version number in the version string. If you're a good citizen and always maintain backwards compatibility, your major version is stuck at "1" forever, not adding anything useful - and if you change the package name whenever you do break backwards compatibility, you don't need it anyways...

So, how about finding a nice funeral home for the major version number?

Friday, July 5, 2013

What's a Release?

I recently stumbled over the ITIL (Information Technology Infrastructure Library), a commercial IT certification outfit which charges 4 figure fees for a variety of "training modules", with a dozen or so required to earn you a "master practitioner" title...

Their definition of a release is:

Obviously, when viewing a succession of releases, it makes a lot of sense to talk about deltas and changes, but that is a consequence of producing many releases and not part of the definition of a single release.

A release is a snapshot, not a process. The question should be: "what is it that distinguishes the specific snapshot we call a release from any other snapshot taken from our software development history?"

The main point is that the release snapshot has been validated. A person or a set of people made the call and said it was good to go. That decision is recorded, and the responsibility for both the input into the decision and the decision itself can be audited.

In theory, every release should be considered as a whole. In practice, performing complete regression testing on every release is likely to be way too expensive for most shops. This is the reason for emphasizing the incremental approach in many release processes.

There is nothing wrong with making this optimization as long as everyone does it in full awareness that it really is a shortcut. Release managers need to consider the following risk factors when making this tradeoff between speed and precision:

The ITIL discussion about packaging really means that a release is published, released out of the direct control of the stakeholders.

Incorporating these two facets results in my version of the definition of a release as:

Their definition of a release is:

A Release consists of the new or changed software and/or hardware required to implement approved changes. Release categories include:Even though this definition does pretty much represent a consensus impression of the nature of a release, I think the definition, besides being too focused on implementation details, places too much emphasizes on "change".Releases can be divided based on the release unit into:

- Major software releases and major hardware upgrades, normally containing large amounts of new functionality, some of which may make intervening fixes to problems redundant. A major upgrade or release usually supersedes all preceding minor upgrades, releases and emergency fixes.

- Minor software releases and hardware upgrades, normally containing small enhancements and fixes, some of which may have already been issued as emergency fixes. A minor upgrade or release usually supersedes all preceding emergency fixes.

- Emergency software and hardware fixes, normally containing the corrections to a small number of known problems.

- Delta release: a release of only that part of the software which has been changed. For example, security patches.

- Full release: the entire software program is deployed—for example, a new version of an existing application.

- Packaged release: a combination of many changes—for example, an operating system image which also contains specific applications.

Obviously, when viewing a succession of releases, it makes a lot of sense to talk about deltas and changes, but that is a consequence of producing many releases and not part of the definition of a single release.

A release is a snapshot, not a process. The question should be: "what is it that distinguishes the specific snapshot we call a release from any other snapshot taken from our software development history?"

The main point is that the release snapshot has been validated. A person or a set of people made the call and said it was good to go. That decision is recorded, and the responsibility for both the input into the decision and the decision itself can be audited.

In theory, every release should be considered as a whole. In practice, performing complete regression testing on every release is likely to be way too expensive for most shops. This is the reason for emphasizing the incremental approach in many release processes.

There is nothing wrong with making this optimization as long as everyone does it in full awareness that it really is a shortcut. Release managers need to consider the following risk factors when making this tradeoff between speed and precision:

- Do I really know all the dependencies in order to evaluate the effect of a single change?

- Do I really understand all the side effects?

- Do all the stakeholders understand and agree with the risk assessment?

The ITIL discussion about packaging really means that a release is published, released out of the direct control of the stakeholders.

Incorporating these two facets results in my version of the definition of a release as:

A validated snapshot published to a point of production with a commitment by the stakeholders to not roll back.I think this captures the essence better:

- A release is a state, not a change.

- A release represents a commitment by the stakeholders.

- A release is published, which really means it has escaped the from the control of the stakeholders. Outsiders will see what is, and there is nothing the stakeholders can do about it.

Saturday, June 1, 2013

Mission Creep

I just discussed a simple perishable lock service, and of course the usual thing happens: mission creep.

It turns out QA wants to enforce "code freeze", aka no more deploys to certain test environments where QA is doing their thing.

At first, besides being a reasonable requests, it is amazingly easy to add on to the code. Instead of setting the expiration date to "now", we set it to some user specified time, possible way into the future.

But we are planting the seeds of doom....

Remember the initial assumptions of "perishable" locks... that is they are perishable.

In other words, we assume that the lock requester (usually a build process) is the weak link in the chain, and is more likely to die than the lock manager. And since ongoing builds essentially act as a watchdog for the lock manager (i.e. if builds fail because the lock manager crashed, folks will most certainly let me know about it), I can be relatively lax and skimp on things like persisting the queue state. If the lock manager crashes, so what: some builds will fail, somebody is going to complain, I fix and restart the service, done.

But now suddenly, QA will start placing locks with an expiration date far into the future. Now, if the lock manager crashes, it's not obvious anyone will notice immediately. Even if someone notices, it's not clear they will be aware of the state it was in prior to the crash, so there is a real risk of invalidating weeks of QA work.

So, what am I to do?

So what would you do?

It turns out QA wants to enforce "code freeze", aka no more deploys to certain test environments where QA is doing their thing.

At first, besides being a reasonable requests, it is amazingly easy to add on to the code. Instead of setting the expiration date to "now", we set it to some user specified time, possible way into the future.

But we are planting the seeds of doom....

Remember the initial assumptions of "perishable" locks... that is they are perishable.

In other words, we assume that the lock requester (usually a build process) is the weak link in the chain, and is more likely to die than the lock manager. And since ongoing builds essentially act as a watchdog for the lock manager (i.e. if builds fail because the lock manager crashed, folks will most certainly let me know about it), I can be relatively lax and skimp on things like persisting the queue state. If the lock manager crashes, so what: some builds will fail, somebody is going to complain, I fix and restart the service, done.

But now suddenly, QA will start placing locks with an expiration date far into the future. Now, if the lock manager crashes, it's not obvious anyone will notice immediately. Even if someone notices, it's not clear they will be aware of the state it was in prior to the crash, so there is a real risk of invalidating weeks of QA work.

So, what am I to do?

- Ignore the problem (seriously: there is a risk balance that can be theoretically computed: the odds of a lock manager crash (which increases, btw, if you add complexity) vs the cost of QA work lost).

- Implement persistence of the state (which suddenly adds complexity and increases the probability of failure - simplest example being: "out of disk space")

- Pretend QA is just another build, and maintain a keep-alive process someplace.

So what would you do?

Wednesday, May 29, 2013

Annotating Git History

As is well known, git history is sacred. There are good reasons for it, but it does make it difficult sometimes to correct errors and integrate git into larger processes.

For example, it is very common to include references to issue tracking systems in git commit comments. It would be nice if one could mine this information from git in order to update the issues automatically, for example at build time or at deploy time.

Unfortunately, we're all human and make mistakes. Even if you have validation hooks and do the best possible effort, invalid references or forgotten references make it difficult to rely on the commit comments.

It would be nice if it was easier to fix old git commit comments, but history being immutable is one of the core concepts baked into git, and that's a good thing. So I went for a different solution.

How about attaching annotated git tags to commits? That would preserve the history while adding annotations with newer information. The only trouble with this is that the current git commands aren't very good at revealing this information to casual git users.

The convention I currently use is quite simple: attach a tag whose name begins with CANCEL to the commit to be updated.

Let's say we wish to correct this commit:

And what if there is another error in the annotation? Simply add another tag with the correction. The number in red above is the unix timestamp expressed as seconds since 1970, so you can sort the annotations by time and just take the latest.

By the way, if anyone knows a nicer way to show all tags attached to a specific commit, please let me know.

For example, it is very common to include references to issue tracking systems in git commit comments. It would be nice if one could mine this information from git in order to update the issues automatically, for example at build time or at deploy time.

Unfortunately, we're all human and make mistakes. Even if you have validation hooks and do the best possible effort, invalid references or forgotten references make it difficult to rely on the commit comments.

It would be nice if it was easier to fix old git commit comments, but history being immutable is one of the core concepts baked into git, and that's a good thing. So I went for a different solution.

How about attaching annotated git tags to commits? That would preserve the history while adding annotations with newer information. The only trouble with this is that the current git commands aren't very good at revealing this information to casual git users.

The convention I currently use is quite simple: attach a tag whose name begins with CANCEL to the commit to be updated.

Let's say we wish to correct this commit:

% git log -1 5a434aAttach the tag, like this:

commit 5a434ac808d9a5c10437b45b06693cf03227f6b3

Author: Christian Goetze <cgoetze@miaow.com>

Date: Wed May 29 12:57:57 2013 -0700

TECHOPS-218 Render superceded messages.

% git tag -m "new commit message" CANCEL_5a434a 5a434aNow, the build and deploy scripts can mine this information. They start by listing all the tags attached to the commit:

% git show-ref --tags -d \Then, for every tag, we check whether it's an annotated tag:

| grep '^'5a434a \

| sed -e 's,.* refs/tags/,,' -e 's/\^{}//'

% git describe --exact-match CANCEL_5a434aNote that this command fails if it's a "lightweight" tag. Once you know it's an annotated tag, you can run:

CANCEL_5a434a

% git cat-file tag CANCEL_5a434aThis is easy to parse, and can be used as a replacement for the commit comment.

object 5a434ac808d9a5c10437b45b06693cf03227f6b3

type commit

tag CANCEL_5a434a

tagger Christian Goetze <cgoetze@miaow.com> 1369865583

new commit message

And what if there is another error in the annotation? Simply add another tag with the correction. The number in red above is the unix timestamp expressed as seconds since 1970, so you can sort the annotations by time and just take the latest.

By the way, if anyone knows a nicer way to show all tags attached to a specific commit, please let me know.

Friday, May 17, 2013

Reinventing a Wheel: Hierarchical Perishable Locks

I've written before about the perennial build or buy dilemma, and here it is again.

I was desperate for a solution to the following kinds of problems:

Ideally, it should be a hierarchical locking mechanism to support controlled sharing for concurrent tasks, while still being able to lock the root resource.

If you use naive methods, you are likely to get hurt in several ways:

I'm sure that the moment I hit publish, I'll locate the original wheel hiding in plain sight someplace. Maybe some kind reader will reveal it for me...

Meanwhile, I have something that works.

The basic idea is simple:

If the lock holder reaches the front of both queues, then the grab request will succeed, and the task can proceed.

If another task comes along and requests:

Now, if the big database cleanup task comes along, it will just say:

Should one of the test tasks decide to give it a try, they will again end up requesting a shared lock on mydatabase. Since a shared lock cannot be merged with an exclusive lock, the new shared lock will be queued after the exclusive lock, and the test task will have to wait until the cleanup task is done. Fairness is preserved, and it appears that we have all the right properties....

Code is here - please be gentle :)

I was desperate for a solution to the following kinds of problems:

- I have a bunch of heavyweight tests running in parallel, but using the same database. These tests unfortunately tend to create a mess, and every once in a while the database needs to be reset. During the reset, no access by automated tests should be permitted.

- Integration and UI tests run against a deployed version of the software. Most of these tests can be run concurrently, but not all - but more importantly, none of those tests will deliver a reliable result if a new deploy yanks away the services while a test against them is running. (yes, I know, they should be "highly available", but they aren't, at least not on those puny test environments).

Ideally, it should be a hierarchical locking mechanism to support controlled sharing for concurrent tasks, while still being able to lock the root resource.

If you use naive methods, you are likely to get hurt in several ways:

- Builds are fickle. They fail, crash or get killed by impatient developers. Any mechanism that requires some sort of cleanup action as part of the build itself will not make you very happy.

- Developers have a strong sense of fairness and will get impatient if some builds snag a lock right out from under their nose. So we need a first come first serve queuing mechanism.

- If you use touch files with timestamps or some similar device, you run up against the lack of an atomic "test and set" operation, and also run the risk of creating a deadlock.

I'm sure that the moment I hit publish, I'll locate the original wheel hiding in plain sight someplace. Maybe some kind reader will reveal it for me...

Meanwhile, I have something that works.

The basic idea is simple:

- My services keeps a hash (or dict) of queues.

- Every time someone requests a resource, the queue for that resource is either created from scratch, or, if it already exists, searched for an entry by the same requester.

- If the request is already in the queue, a timestamp in the request is updated, otherwise the the request is added at the end of the queue.

- If the request is first in line, we return "ok", otherwise we return "wait".

- In regular intervals, we go through all queues and check if the head item has timed out. If yes, we remove it, and check the next item, removing all the dead items until we find an unexpired one, or until the queue is empty.

- If a request to release an resource comes in, we check the queue associated with the resource and set the timestamp to zero. This will cause the request to be automatically purged when it comes up.

- The event loop model ensures my code "owns" the data while processing a request. No shared memory or threading issues.

- The requests and responses are very short, so no hidden traps triggered by folks submitting megabyte sized PUT requests .

- The queues tend to be short, unless you have a big bottleneck, which really means you have other problems. This means that processing the requests is essentially constant time. A large number of resources is not really a problem, since it is easy to shard your resource set.

- We introduce shared vs exclusive locks. Shared locks have many owners, each with their own timestamp. When enqueuing a shared lock, we first check if the last element in the queue is also a shared lock, and if yes, we merge it instead of adding a new lock to the queue.

- We introduce resource paths, and request an exclusive lock for the last element in the path, but shared locks for all the parent elements.

grab mydatabase/mytestThis will create a shared lock on mydatabase, and an exclusive lock on mydatabase/mytest.

If the lock holder reaches the front of both queues, then the grab request will succeed, and the task can proceed.

If another task comes along and requests:

grab mydatabase/yourtestthen the shared lock request for mydatabase is merged, and that task will also be at the head of the queues for all of its resources, and can proceed.

Now, if the big database cleanup task comes along, it will just say:

grab mydatabaseSince mydatabase here is at the end of the resource path, it will request an exclusive lock which will not be merged with the previous ones, but queued after them. The cleanup task will have to wait until all of the owners of the shared lock release their part, and only then can it proceed.

Should one of the test tasks decide to give it a try, they will again end up requesting a shared lock on mydatabase. Since a shared lock cannot be merged with an exclusive lock, the new shared lock will be queued after the exclusive lock, and the test task will have to wait until the cleanup task is done. Fairness is preserved, and it appears that we have all the right properties....

Code is here - please be gentle :)

Monday, May 6, 2013

Why is it so hard to collect release notes (part III)

Moving right along (see parts one and two), we were using a simple system with two entry points and one piece of shared code.

We represent the state using a build manifest, expressed as a JSON file:

{ "Name": "January Release",

"Includes: [{ "Name": "Foo.app",

"Rev": "v1.0",

"Includes": [{ "Name": "common",

"Rev": "v1.0" }]},

{ "Name": "Bar.app",

"Rev": "v1.0",

"Includes": [{ "Name": "common",

"Rev": "v1.0" }]}]}

After some work, both the Foo team and the Bar team made some independent modifications, each of which made their end happy.

Since we're always in a hurry to release, we defer merging the common code, and release what we have instead. Now we would like to know what changed since the initial state.

First, we construct our new build manifest:

{ "Name": "January Release",In the previous post of the series, we cheated by only modifying one endpoint at a time, and leaving the other one unchanged. Now we change both, and it turns out the answer depends on how you ask the question:

"Includes: [{ "Name": "Foo.app",

"Rev": "v2.0",

"Includes": [{ "Name": "common",

"Rev": "v2.0" }]},

{ "Name": "Bar.app",

"Rev": "v1.1",

"Includes": [{ "Name": "common",

"Rev": "v1.1" }]}]}

Bar.app: git log ^v1.0 v1.1 # in Bar.app

git log ^v1.0 v1.1 # in common

Foo.app: git log ^v1.0 v2.0 # in Foo.app

git log ^v1.0 v2.0 # in common

whole: git log ^v1.0 v1.1 # in Bar.appWhy bring this up? Well, in the previous post I made an argument that it is not only convenient, but necessary to combine the start points of the revision ranges used to get the changes. The same is not true for the end points, as we cannot by any means claim that v2.0 of common is actually in use by Bar.app.

git log ^v1.0 v2.0 # in Foo.app

git log ^v1.0 v1.1 v2.0 # in common

So, in order to preserve the ability to answer the question "what changed only in Bar.app", as opposed to the whole system, we need to keep the log output separate for each endpoint.

Now we are pretty close to an actual algorithm to compare two build manifests:

- Traverse the old manifest and register the start points for every repo

- Traverse the new manifest and register the end points for every repo, and which entry point uses the end points.

- Traverse all registered repos, and for every registered endpoint, run:

git log ^startpoint1 ^startpoint2 ... endpoint

and register the commits and the entry points to which they belong.

Tuesday, April 30, 2013

Version Numbers in Code Considered Harmful

Time for me to eat crow. Some time ago, I wrote a post titled "Version Numbers in Branch Names Considered Harmful". I'm changing my mind about this, and it's largely due to the way revision control systems have changed over time.

Specifically, in git and mercurial, branches are no longer heavy weight items. In fact, they hardly exist at all until one actually makes a change, so creating branches in and itself is not such a big deal, whereas in Perforce and Subversion, creating branches is a huge deal, and there is a strong motivation to avoid doing it.

Instead, encoding version information in the branch names turns out to be a pretty good technique, since it allows me to remove any hard coded version strings from the code itself. This turns out to have some wonderful properties in our new "agile" world of fast paced incremental releases.

In my previous post about compiling release notes, I showed how build manifests can be used to create a list of revisions involved in a build. Now the big trick is that in git and in mercurial, the mere act of creating a branch does not touch anything in the revision graph.

This means that if we use the git revision hashes as our source of truth, we can detect whether something really changed, or whether we just rebuilt the same thing, but with a different branch name, and hence a different version number.

A crucial element to make this work is to ensure the build system injects the current version number at build time, usually by extracting it from the branch name.

Doing this allows a development team to keep branching all repositories as new releases come out, but the branches only matter if something changes. All you need to communicate is the current release version number, and developers know that for any change in any repository, they should use the branch associated with it.

When a release occurs, all you need to do is compare the hashes of the previous release with the current one, and only release the pieces that changed. No thinking required, no risk of rebuilds of essentially unchanged items, it just works.

Now, how the dependencies of those items should be managed at build time is a much more interesting question, with lots of potential for bikeshed.

Specifically, in git and mercurial, branches are no longer heavy weight items. In fact, they hardly exist at all until one actually makes a change, so creating branches in and itself is not such a big deal, whereas in Perforce and Subversion, creating branches is a huge deal, and there is a strong motivation to avoid doing it.

Instead, encoding version information in the branch names turns out to be a pretty good technique, since it allows me to remove any hard coded version strings from the code itself. This turns out to have some wonderful properties in our new "agile" world of fast paced incremental releases.

This means that if we use the git revision hashes as our source of truth, we can detect whether something really changed, or whether we just rebuilt the same thing, but with a different branch name, and hence a different version number.

A crucial element to make this work is to ensure the build system injects the current version number at build time, usually by extracting it from the branch name.

Doing this allows a development team to keep branching all repositories as new releases come out, but the branches only matter if something changes. All you need to communicate is the current release version number, and developers know that for any change in any repository, they should use the branch associated with it.

When a release occurs, all you need to do is compare the hashes of the previous release with the current one, and only release the pieces that changed. No thinking required, no risk of rebuilds of essentially unchanged items, it just works.

Now, how the dependencies of those items should be managed at build time is a much more interesting question, with lots of potential for bikeshed.

Saturday, April 20, 2013

Why is it so hard to collect release notes (part II)

I've written before about this subject. It turns out that in practice it is quite difficult to precisely list all changes made to large scale piece of software, even with all the trappings of modern revision control systems available.

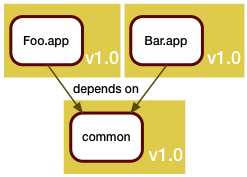

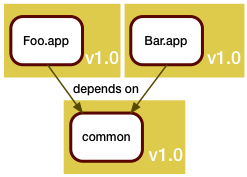

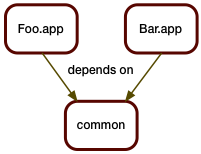

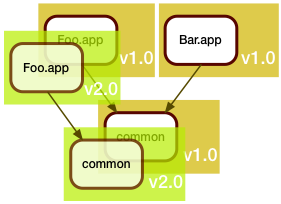

To demonstrate the challenges involved with modern "agile" processes, let's take a relatively simple scenario: two applications or services, each depending on a piece of shared code.

To demonstrate the challenges involved with modern "agile" processes, let's take a relatively simple scenario: two applications or services, each depending on a piece of shared code.

In the good old days, this was somewhat of a no-brainer. All pieces would live in the same source tree, and be built together.

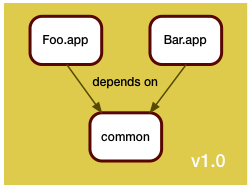

A single version control repository would be used, and a release consists of all components, built once.

A new release consists of rebuilding all pieces and delivering them as a unit. So finding the difference between two releases was a pure version control system operation, and no additional thinking was required.

The challenge in the bad old days was that every single one of your customers probably had a different version of your system, and was both demanding in fixing their bugs, but refusing to upgrade to your latest release. Therefore you had to manage lots of patch branches.

The challenge in the bad old days was that every single one of your customers probably had a different version of your system, and was both demanding in fixing their bugs, but refusing to upgrade to your latest release. Therefore you had to manage lots of patch branches.

Still, everything was within the confines of a single version control repository, and figuring out the deltas between two releases was essentially running:

The mantra here is: if it ain't broke, don't fix it. As we've seen before, rebuilding and re-releasing an unchanged component already can have risks, and forcing a working component to be rebuilt because of unrelated changes will at least cause delay.

The mantra here is: if it ain't broke, don't fix it. As we've seen before, rebuilding and re-releasing an unchanged component already can have risks, and forcing a working component to be rebuilt because of unrelated changes will at least cause delay.

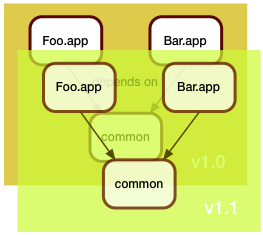

So, to support the new world, we split our single version control repository into separate repositories, one for each component. The components get built separately, and can be released separately.

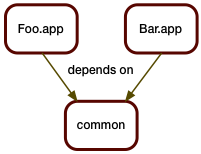

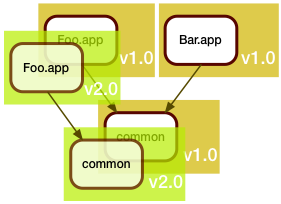

In this example, Foo.app got updated. This update unfortunately also required a fix to the shared code library. Arguably, Bar.app should be rebuilt to see if the fix broke it, but we are in a hurry (when are we ever not in a hurry?), and since Bar.app is functioning fine as is, we don't rebuild it. We just leave it alone.

In this example, Foo.app got updated. This update unfortunately also required a fix to the shared code library. Arguably, Bar.app should be rebuilt to see if the fix broke it, but we are in a hurry (when are we ever not in a hurry?), and since Bar.app is functioning fine as is, we don't rebuild it. We just leave it alone.

As we deploy this to production, we realize we now have two different versions of common there.

That in itself is usually not a problem if we build things right, for example by using "assemblies", using static linking, or just paying attention to the load paths.

I sometimes joke that the first thing everyone does in a C++ project is to override new. The first thing everyone does in a Java project is to write their own class loader. This scenario explains why.

But with this new process, answering the question of "What's new in production?" is no longer a simple version control operation. For one, there isn't a single repository anymore - and then the answer depends on the service or application you're examining.

In this case, the answer would be:

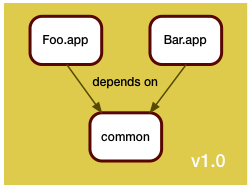

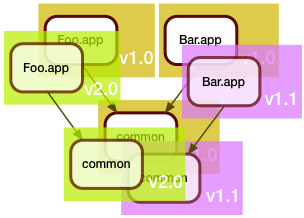

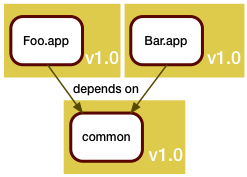

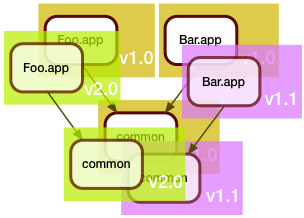

Now the development team around Bar.app wasn't idle during all this time, and they also came up with some changes. They too needed to update the shared code in common.

Now the development team around Bar.app wasn't idle during all this time, and they also came up with some changes. They too needed to update the shared code in common.

Of course, they also were in a hurry, and even though they saw that the Foo.app folks were busy tweaking the shared code, they decided that a merge was too risky for their schedule, and instead branched the common code for their own use.

When they got it all working, the manifest of the February release looked like this:

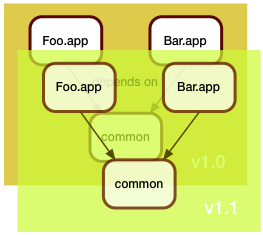

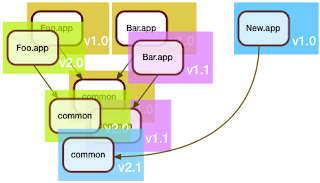

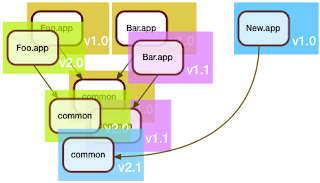

So far, this wasn't so difficult. Where things get interesting is when a brand new service comes into play.

So far, this wasn't so difficult. Where things get interesting is when a brand new service comes into play.

Here, the folks who developed New.app decided that they will be good citizens and merge the shared code in common. "Might as well", they thought, "someone's gotta deal with the tech debt".

Of course, the folks maintaining Foo.app and Bar.app would have none of it: "if it ain't broke, don't fix it", they said, and so the March release looked like this:

I think the best answer is: "all changes between the oldest change seen by the other apps, and the change seen by the new app". Or another way to put it: all changes made by the other apps that are ancestors of the changes made by the new app. This would be:

A single recursive traversal of the build manifests is no longer enough. We need to make two passes.

The first pass will compare existing items vs existing items, each registering the revision ranges used for every repository.

The second pass processes the new items, now using the revision ranges accumulated in the first pass to generate the appropriate git log commands for all the dependencies of each new item.

This method seems to work right in all the corner cases:

Notes:

To demonstrate the challenges involved with modern "agile" processes, let's take a relatively simple scenario: two applications or services, each depending on a piece of shared code.

To demonstrate the challenges involved with modern "agile" processes, let's take a relatively simple scenario: two applications or services, each depending on a piece of shared code.In the good old days, this was somewhat of a no-brainer. All pieces would live in the same source tree, and be built together.

A single version control repository would be used, and a release consists of all components, built once.

A new release consists of rebuilding all pieces and delivering them as a unit. So finding the difference between two releases was a pure version control system operation, and no additional thinking was required.

The challenge in the bad old days was that every single one of your customers probably had a different version of your system, and was both demanding in fixing their bugs, but refusing to upgrade to your latest release. Therefore you had to manage lots of patch branches.

The challenge in the bad old days was that every single one of your customers probably had a different version of your system, and was both demanding in fixing their bugs, but refusing to upgrade to your latest release. Therefore you had to manage lots of patch branches.Still, everything was within the confines of a single version control repository, and figuring out the deltas between two releases was essentially running:

git log <old>..<new>In the software as a service model, you don't have the patching problem. Instead, you have the challenge of getting your fixes out to production as fast and as safely as possible.

The mantra here is: if it ain't broke, don't fix it. As we've seen before, rebuilding and re-releasing an unchanged component already can have risks, and forcing a working component to be rebuilt because of unrelated changes will at least cause delay.

The mantra here is: if it ain't broke, don't fix it. As we've seen before, rebuilding and re-releasing an unchanged component already can have risks, and forcing a working component to be rebuilt because of unrelated changes will at least cause delay.So, to support the new world, we split our single version control repository into separate repositories, one for each component. The components get built separately, and can be released separately.

In this example, Foo.app got updated. This update unfortunately also required a fix to the shared code library. Arguably, Bar.app should be rebuilt to see if the fix broke it, but we are in a hurry (when are we ever not in a hurry?), and since Bar.app is functioning fine as is, we don't rebuild it. We just leave it alone.

In this example, Foo.app got updated. This update unfortunately also required a fix to the shared code library. Arguably, Bar.app should be rebuilt to see if the fix broke it, but we are in a hurry (when are we ever not in a hurry?), and since Bar.app is functioning fine as is, we don't rebuild it. We just leave it alone.As we deploy this to production, we realize we now have two different versions of common there.

That in itself is usually not a problem if we build things right, for example by using "assemblies", using static linking, or just paying attention to the load paths.

I sometimes joke that the first thing everyone does in a C++ project is to override new. The first thing everyone does in a Java project is to write their own class loader. This scenario explains why.

But with this new process, answering the question of "What's new in production?" is no longer a simple version control operation. For one, there isn't a single repository anymore - and then the answer depends on the service or application you're examining.

In this case, the answer would be:

Bar.app: unchangedIn order to divine this somehow, we need to register the exact revisions used for every piece of the build. I personally like build manifests embedded someplace in the deliverable items. These build manifests would include all the dependency information, and would look somewhat like this:

Foo.app: git log v1.0..v2.0 # in Foo.app's repo

git log v1.0..v2.0 # in common's repo

{ "Name": "Foo.app",The same idea can be used to describe the state of a complete release. We just aggregate all the build manifests into a larger one:

"Rev": "v2.0",

"Includes": [ { "Name": "common",

"Rev": "v2.0" } ] }

{ "Name": "January Release",

"Includes: [{ "Name": "Foo.app",

"Rev": "v2.0",

"Includes": [{ "Name": "common",

"Rev": "v2.0" }]},

{ "Name": "Bar.app",

"Rev": "v1.0",

"Includes": [{ "Name": "common",

"Rev": "v1.0" }]}]}

Now the development team around Bar.app wasn't idle during all this time, and they also came up with some changes. They too needed to update the shared code in common.

Now the development team around Bar.app wasn't idle during all this time, and they also came up with some changes. They too needed to update the shared code in common.Of course, they also were in a hurry, and even though they saw that the Foo.app folks were busy tweaking the shared code, they decided that a merge was too risky for their schedule, and instead branched the common code for their own use.

When they got it all working, the manifest of the February release looked like this:

{ "Name": "February Release",It should be easy to see how recursive traversal of both build manifests will yield the answer to the question "What changed between January and February?":

"Includes: [{ "Name": "Foo.app",

"Rev": "v2.0",

"Includes": [{ "Name": "common",

"Rev": "v2.0" }]},

{ "Name": "Bar.app",

"Rev": "v1.1",

"Includes": [{ "Name": "common",

"Rev": "v1.1" }]}]}

Foo.app: unchanged

Bar.app: git log v1.0..v1.1 # in Bar.app's repo

git log v1.0..v1.1 # in common's repo

So far, this wasn't so difficult. Where things get interesting is when a brand new service comes into play.

So far, this wasn't so difficult. Where things get interesting is when a brand new service comes into play.Here, the folks who developed New.app decided that they will be good citizens and merge the shared code in common. "Might as well", they thought, "someone's gotta deal with the tech debt".

Of course, the folks maintaining Foo.app and Bar.app would have none of it: "if it ain't broke, don't fix it", they said, and so the March release looked like this:

{ "Name": "March Release",So, what changed between February and March?

"Includes: [{ "Name": "Foo.app",

"Rev": "v2.0",

"Includes": [{ "Name": "common",

"Rev": "v2.0" }]},

{ "Name": "Bar.app",

"Rev": "v1.1",

"Includes": [{ "Name": "common",

"Rev": "v1.1" }]},

{ "Name": "New.app",

"Rev": "v1.0",

"Includes": [{ "Name": "common",

"Rev": "v2.1" }]}]}

Foo.app: unchangedThe first part of the answer is easy. Since New.app's repository is brand new, it only makes sense to include all changes.

Bar.app: unchanged

New.app: ????

Foo.app: unchangedOne can make a reasonable argument that from the point of view of New.app, all the changes in common are also new, so they should be listed. In practice, though, this could be a huge list, and wouldn't really be that useful, as most changes would be completely unrelated to New.app, and also would be unrelated to anything within the new release. We need something better.

Bar.app: unchanged

New.app: git log v1.0 # in New.app's repository

and then ????

I think the best answer is: "all changes between the oldest change seen by the other apps, and the change seen by the new app". Or another way to put it: all changes made by the other apps that are ancestors of the changes made by the new app. This would be:

Foo.app: unchangedBut how do we code this?

Bar.app: unchanged

New.app: git log v1.0 # in New.app

git log v2.1 ^v2.0 ^v1.1 # in common

A single recursive traversal of the build manifests is no longer enough. We need to make two passes.

The first pass will compare existing items vs existing items, each registering the revision ranges used for every repository.

The second pass processes the new items, now using the revision ranges accumulated in the first pass to generate the appropriate git log commands for all the dependencies of each new item.

This method seems to work right in all the corner cases:

- If a dependency is brand new, it won't get traversed by any other app, so the list of revisions to exclude in the git log command (the ones prefixed with ^) will be empty, resulting in a git command to list all revisions - check.

- If the new app uses a dependency as is, not modified by any other app, then the result will be revision ranges bound by the same revisions, thereby producing no new changes - check.

Notes:

- In the examples here, I used tags instead of git revision hashes. This was simply done for clarity. In practice, I would only ever apply tags at the end of the release process, never earlier. After all, the goal is to create tags reflecting the state as it is, not as it should be.

- The practice of manually managing your dependencies has unfortunately become quite common in the java ecosystem. Build tools like maven and ivy support this process, but it has a large potential to create technological debt, as the maintainers of individual services can kick the can down the road for a long time before having to reconcile their use of shared code with other folks. Often, this only happens when a security fix is made, and folks are forced to upgrade, and then suddenly all that accumulated debt comes due. So if you wonder why some well known exploits still work on some sites, that's one reason...

- Coding the traversal makes for a nice exercise and interview question. It's particularly tempting to go for premature optimization, which then comes back to bite you. For example, it is tempting to skip processing an item where the revision range is empty (i.e. no change), but in that case you might also forget to register the range, which would result in a new app not seeing that an existing app did use the item...

- Obviously, collecting release notes is more than just collecting commit comments, but this is, I think, the first step. Commit comments -> Issue tracker references -> Release Notes. The last step will always be a manual one, after all, that's where the judgement comes in.

Tuesday, April 2, 2013

What Is vs What Should Be

Along the lines of "What is it We Do Again?":

It's therefore very important to always locate the source of truth for any statement you wish to make.

For example, in my previous post describing my tagging process, I do something which appears to be bizarre: I go and retrieve build manifests from the actual production sites and use those as my source of truth for tagging the source trees.

The reason I do it that way is that I have a fairly bullet-proof chain of evidence to prove that the tagged revisions truly are in production:

Consider common alternatives:

The complexity of modern software as a service environments isn't going away, and we will need to get used to the idea of many versions of the same thing co-existing in a single installation. The fewer assumptions made in the process of presenting the state of these installations, the better.

- Product Management is all about what should be;

- Release Management is all about what is.

- You build a piece of software after a developer commits a fix. The bug should be fixed. Is it?

- You deploy a war file to a tomcat server. The service should be updated. Is it?

- ...

It's therefore very important to always locate the source of truth for any statement you wish to make.

For example, in my previous post describing my tagging process, I do something which appears to be bizarre: I go and retrieve build manifests from the actual production sites and use those as my source of truth for tagging the source trees.

The reason I do it that way is that I have a fairly bullet-proof chain of evidence to prove that the tagged revisions truly are in production:

- The build manifests are created at build time, so they reflect the revisions used right then;

- The build manifests are packaged and travel along with every deploy;

- The build manifests can only get to the target site via a deploy;

- The build manifests contain hash signatures that can be run against the deployed files to validate that the manifest matches up with the file.

Consider common alternatives:

- Tag at build time. Problem becomes identifying the tag actually deployed to production - so this doesn't really solve anything.

- Rebuild for deploy. Problem becomes identifying the source tree configs used by QA. In addition, can you really trust your build system to be repeatable?

- They can release pieces incrementally as they pass QA;

- They can try different combinations of builds;

- They can roll back to previous builds;

The complexity of modern software as a service environments isn't going away, and we will need to get used to the idea of many versions of the same thing co-existing in a single installation. The fewer assumptions made in the process of presenting the state of these installations, the better.

Monday, April 1, 2013

Why Don't You Just Tag Your Releases?

In my experience, any utterance beginning with the words "why don't you just..." can be safely ignored.

Then again, ignoring isn't always an option...

So, why don't we just tag the released code?

Back in the days, when software was a small set of executable programs linked from a small set of libraries, this was a simple thing to do. Usually, the whole build was done from a single source tree in one pass, and there never was any ambiguity over which version of each file was used in a build.

The modern days aren't that simple anymore. These days, we build whole suites of services, built from a large set of libraries, often using many different versions of the same library at the same time. Why? mainly because folks don't care to rebuild working services just because one or two dependencies changed.

Furthermore, we also use build systems like maven, based on ivy and similar artifact management tools, and other packaging tools which allow anyone to specify precisely which version of a piece of shared code they wish to use for their particular service or executable. As a side effect, we also get faster builds simply because we avoid rebuilding many libraries and dependencies.

Most people will opt for the "what I know can't hurt me, so why take a chance" approach and resist upgrading dependencies until forced to do so, either because they wish to use a new feature, or for security reasons.

Therefore, in any production environment, you will see many different versions of the same logical entity used at the same time, so tagging a single revision in the source tree of a shared piece of code is impossible. Many revisions need to be tagged.

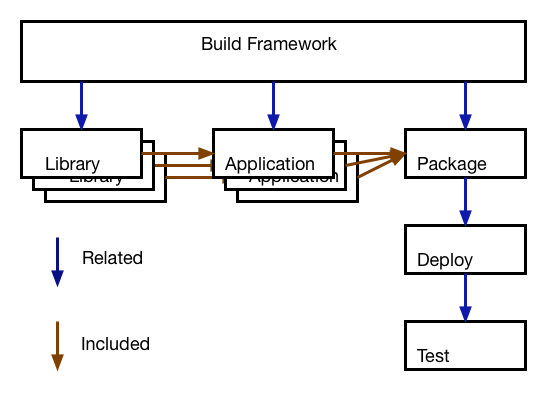

So here's what I currently do:

First, I use Build Manifests. These contain both the dependency relationship between various build objects, and their specific VCS revision ids.

Next, I identify the top level items. These are usually the pieces delivering the actual shippable item, either a service or an executable, or some other package. Every one of these top level items will have a unique human readable name, and a version. This is what I use as the basic tag name.

So my tag names end up looking like this:

The date stamp is essentially just there to easily sort the tags and group related versions of related services together, and also to keep tags unique and help locate any bugs in the tagging process. They could be omitted in a perfect world.

With this I run my tagger once a day, retrieving the build manifests from the final delivery area (could be our production site, could be our download site, or wherever the final released components live). We do this to act as a cross-check for the release process. If we find something surprising there, then we know our release process is broken someplace.

The tagger will start with every top level item and generate the tag name, then traverse the dependency list, adding an id for every dependency build used in the top level item. Unless the revision used to build the dependency has already been tagged, it will get the tag with the dependency path.

When checking whether a specific revision is already tagged, I deliberately ignore the date portion and the dependency path portions of the tag and only check the name and version part. This will avoid unnecessary duplication of tags.

In the end, you will get:

Updated: grammar, formatting and clarity.

Then again, ignoring isn't always an option...

So, why don't we just tag the released code?

Back in the days, when software was a small set of executable programs linked from a small set of libraries, this was a simple thing to do. Usually, the whole build was done from a single source tree in one pass, and there never was any ambiguity over which version of each file was used in a build.

The modern days aren't that simple anymore. These days, we build whole suites of services, built from a large set of libraries, often using many different versions of the same library at the same time. Why? mainly because folks don't care to rebuild working services just because one or two dependencies changed.

Furthermore, we also use build systems like maven, based on ivy and similar artifact management tools, and other packaging tools which allow anyone to specify precisely which version of a piece of shared code they wish to use for their particular service or executable. As a side effect, we also get faster builds simply because we avoid rebuilding many libraries and dependencies.

Most people will opt for the "what I know can't hurt me, so why take a chance" approach and resist upgrading dependencies until forced to do so, either because they wish to use a new feature, or for security reasons.

Therefore, in any production environment, you will see many different versions of the same logical entity used at the same time, so tagging a single revision in the source tree of a shared piece of code is impossible. Many revisions need to be tagged.

So here's what I currently do:

First, I use Build Manifests. These contain both the dependency relationship between various build objects, and their specific VCS revision ids.

Next, I identify the top level items. These are usually the pieces delivering the actual shippable item, either a service or an executable, or some other package. Every one of these top level items will have a unique human readable name, and a version. This is what I use as the basic tag name.

So my tag names end up looking like this:

<prefix>-<YYYY-MM-DD>-<top-level-name>-<version>[+<dependency-path>]

The date stamp is essentially just there to easily sort the tags and group related versions of related services together, and also to keep tags unique and help locate any bugs in the tagging process. They could be omitted in a perfect world.

With this I run my tagger once a day, retrieving the build manifests from the final delivery area (could be our production site, could be our download site, or wherever the final released components live). We do this to act as a cross-check for the release process. If we find something surprising there, then we know our release process is broken someplace.

The tagger will start with every top level item and generate the tag name, then traverse the dependency list, adding an id for every dependency build used in the top level item. Unless the revision used to build the dependency has already been tagged, it will get the tag with the dependency path.

When checking whether a specific revision is already tagged, I deliberately ignore the date portion and the dependency path portions of the tag and only check the name and version part. This will avoid unnecessary duplication of tags.

In the end, you will get:

- One tag for every top level source tree

- At least one tag for every dependency. You might get many tags in any specific shared code source tree, depending on how the dependency was used in the top level item. My current record is three different revisions of the same piece of shared code used in the same top level item. Dozens of different revisions are routinely used in one production release (usually containing many top level items).

Updated: grammar, formatting and clarity.

Wednesday, March 27, 2013

Git Tagging Makes Up for a Lot!

In the past, I've been rather indifferent both to git and to tagging. My main complaint about how tagging works in most other VCS systems is that it makes it too easy for folks to keep moving the tags around.

It is therefore with great joy that I read the following in the help text for "git tag":

I am now a git convert. Yay! ...and how do you do moving tags? Well, you don't! Use a branch instead, and fast forward it to whatever location you want to go. Want to go backwards, well, don't. Branch out and revert, and later use "git merge -s ours" to re-unite the moving branch to its parent.

It is therefore with great joy that I read the following in the help text for "git tag":

On Re-tagging

What should you do when you tag a wrong commit and you

would want to re-tag?

If you never pushed anything out, just re-tag it.

Use "-f" to replace the old one. And you're done.

But if you have pushed things out (or others could

just read your repository directly), then others will

have already seen the old tag. In that case you can

do one of two things:

1. The sane thing. Just admit you screwed up, and

use a different name. Others have already seen

one tag-name, and if you keep the same name,

you may be in the situation that two people both

have "version X", but they actually have different

"X"'s. So just call it "X.1" and be done with it.

2. The insane thing. You really want to call the new

version "X" too, even though others have already seen

the old one. So just use git tag -f again, as if you

hadn't already published the old one.

However, Git does not (and it should not) change tags behind

users back. So if somebody already got the old tag, doing a

git pull on your tree shouldn't just make them overwrite the

old one.

If somebody got a release tag from you, you cannot just change

the tag for them by updating your own one. This is a big

security issue, in that people MUST be able to trust their tag

names. If you really want to do the insane thing, you need

to just fess up to it, and tell people that you messed up.

You can do that by making a very public announcement saying:

Ok, I messed up, and I pushed out an earlier version

tagged as X. I then fixed something, and retagged the

*fixed* tree as X again.

If you got the wrong tag, and want the new one, please

delete the old one and fetch the new one by doing:

git tag -d X

git fetch origin tag X

to get my updated tag.

You can test which tag you have by doing

git rev-parse X

which should return 0123456789abcdef..

if you have the new version.

Sorry for the inconvenience.

Does this seem a bit complicated? It should be. There is no way

that it would be correct to just "fix" it automatically. People

need to know that their tags might have been changed.

I am now a git convert. Yay! ...and how do you do moving tags? Well, you don't! Use a branch instead, and fast forward it to whatever location you want to go. Want to go backwards, well, don't. Branch out and revert, and later use "git merge -s ours" to re-unite the moving branch to its parent.

Tuesday, February 19, 2013

Dirty Release Branch

In the past, I've presented a branching model that can be termed as the "clean" branching model. The basic idea was you fixed a bug or implemented a feature in the oldest, still active release branch, and merged forward.

In this post, I'll present the "dirty" branch model, where we do the opposite, kind of. This method seems to be quite popular among git users, with their emphasis on patches, and git's built-in support for handling patches.

In this model, all fixes and features are always first introduced on the main branch. A release branch is cut at some convenient moment, and is treated as a dead end branch. You do "whatever it takes" to slog the code into a releasable shape, knowing that none of the last minute hacks or reversions will be merged back into the main branch.

Bug fixes which affect ongoing development must be made on the main branch, and cherrypicked or patched into the release branch.

When the release is done, the branch is closed. A new branch including all the latest features is then created, and the hack/revert process begins again.

The advantages of this model are:

There is, of course, a major drawback: refactoring code will make the patch process difficult to use.

This process works well for mature code bases that run mostly in maintenance mode.

[update] slight grammar fixup.

In this post, I'll present the "dirty" branch model, where we do the opposite, kind of. This method seems to be quite popular among git users, with their emphasis on patches, and git's built-in support for handling patches.

In this model, all fixes and features are always first introduced on the main branch. A release branch is cut at some convenient moment, and is treated as a dead end branch. You do "whatever it takes" to slog the code into a releasable shape, knowing that none of the last minute hacks or reversions will be merged back into the main branch.

Bug fixes which affect ongoing development must be made on the main branch, and cherrypicked or patched into the release branch.

When the release is done, the branch is closed. A new branch including all the latest features is then created, and the hack/revert process begins again.

The advantages of this model are:

- You can revert at will. Nobody cares, and there is no risk of the reversion being merged back into an ongoing development branch.

- There are no big merges. This comes at the expense of occasional difficulties applying patches correctly, especially if those patches rely on reverted or non-existent code in the release branch.

- You can do quick hacks ("whatever it takes") and be safe in the knowledge that their lifespan will not extend past the current release.

- The change history along the main branch is cleaner and more linear, as there are no merge commits.

There is, of course, a major drawback: refactoring code will make the patch process difficult to use.

This process works well for mature code bases that run mostly in maintenance mode.

[update] slight grammar fixup.

Subscribe to:

Posts (Atom)